E-commerce businesses live and die by Google rankings, so regular SEO audits are a must. Running a comprehensive SEO audit isn’t for the faint of heart: some problems are quick and easy to fix, while others take weeks or months to iron out. Knowing how to spot and fix common issues and opportunities is a good place to start.

When you run an audit, what should you be looking for? How do you resolve any issues you find?

Here are nine things to get right when auditing your e-commerce site for SEO.

1. Flush out Thin or Duplicate Content

The more content there is to crawl, the happier Google is to point traffic your way. When similar content is placed on separate pages, the traffic is split, weakening traffic to individual pages. Too much duplicate content weakens a site’s overall SEO performance. Duplicate content also puts you at risk of algorithmic penalties, which are among the hardest SEO problems to fix because you won’t be notified. You’ll just see a sudden sharp drop in traffic at the same time as an algorithm update, and updates can be a year or more apart. That’s a long time to wait for bad traffic to improve, especially in e-commerce, where traffic is directly correlated to sales.

Duplicate content is one of the most common problems in e-commerce SEO, so it plays a big part in this post.

How do sites wind up with duplicate content?

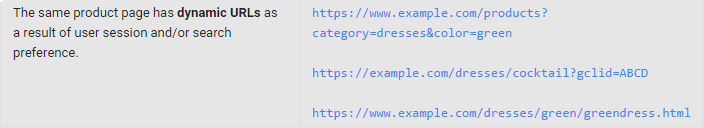

It’s easy to end up with duplicate content from one page to another within your site. It happens even if you’ve carefully avoided duplication when writing product descriptions and other site elements. When a customer follows a different route to a page on your site, say from a menu rather than a Google search, a new URL can be created. That URL points to a page that’s otherwise identical to all the other copies of the same product page, and Google isn’t yet sophisticated enough to let you off the hook: it will treat you like any other site with duplicate content and penalize you.

How can you avoid this?

- Product descriptions should always be your own. Never use the manufacturer’s lifeless, repetitive descriptions.

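- Use robots.txt to keep crawlers out of URLs that only produce repetitive content, such as internal search results or filtered listings. Note that robots.txt works on whole URLs, so it can’t seal off part of a page such as a header or footer.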

- Use canonical tags (see below) to prevent accidental duplication.

There aren’t many tools that help you accurately identify and get rid of duplicate content without manual inputs. However, you can use a tool like DeepCrawl to inspect website architecture (especially HTTP status codes and link structure) and see if you can come up with automated quick fixes to URLs or HTML tags within the code.

In terms of content, DeepCrawl identifies duplicate content well and ranks pages by priority – a critically useful feature for e-commerce sites that have extensive product lines and use faceted navigation.
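To make the idea of duplicate detection concrete, here is a minimal sketch in Python. It is not how DeepCrawl works; it only assumes you already have the body text of each crawled page, and it groups URLs whose text is identical once case and whitespace are ignored. The `pages` data and the `shop.example` URLs are hypothetical.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash the text after lowercasing it and collapsing whitespace."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs whose body text is identical after normalization."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl output: URL -> extracted body text.
pages = {
    "https://shop.example/shoes/red-sneaker": "Red sneaker with  canvas upper.",
    "https://shop.example/sale/red-sneaker": "red sneaker with canvas upper.",
    "https://shop.example/shoes/blue-boot": "Blue leather boot.",
}

for group in find_duplicates(pages):
    print("Duplicate group:", group)
```

This only catches exact copies. Near-duplicates, such as a manufacturer description with one word changed, would need a fuzzier comparison.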

2. Use Canonical Tags

Canonical tags tell Google which URL is the “canonical” one: the preferred version of a page that should be indexed when several URLs show the same content.

Two things happen when multiple users with multiple routes to a page create multiple URLs that all point to the same page. First, Google sees them as different pages and splits traffic between them, and your page authority nosedives. Second, Google indexes each page as a duplicate.

Use canonical tags and Google will ignore those pages, rather than indexing them and flagging your site for duplication.

What does it look like when you get canonicalization wrong?

In Apache, URLs may appear like this:

http://www.somesite.com/

http://www.somesite.com/index.html

http://somesite.com/

http://somesite.com/index.html

In Microsoft IIS, you might see something like:

http://www.somesite.com/

http://www.somesite.com/default.aspx

http://somesite.com/

http://somesite.com/default.aspx

You might also see different versions with the same text capitalized differently.

If each page is the same, but Google counts them as unique due to their different URLs, traffic will be split four ways. A page that gets 1,000 visitors shows up in Google’s algorithm as receiving 250, while the “other three pages” get the rest.

So much for why. What about how?

Using canonical tags is an “opt-in” system, so you’re tagging the pages you want Google to index. It’s fairly simple. The following is a common scenario for large retail sites:
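As one illustration (an assumed setup, not necessarily the scenario the author had in mind), the sketch below collapses the home page variants listed earlier onto a single URL and prints the `<link rel="canonical">` element that would go in each page’s `<head>`. The preferred host, the list of default documents, and the decisions to lowercase the path and drop the query string are all assumptions; a real site may have case-sensitive paths or query parameters that change the content.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit

# Assumptions for illustration: the preferred host and the default-document names.
PREFERRED_HOST = "www.somesite.com"
DEFAULT_DOCUMENTS = ("index.html", "default.aspx")


def canonical_url(url: str) -> str:
    """Map host, case, and default-document variants onto one URL."""
    parts = urlsplit(url)
    path = parts.path.lower() or "/"
    for name in DEFAULT_DOCUMENTS:
        if path.endswith("/" + name):
            path = path[: -len(name)]
    # Drop the query string and fragment; keep the scheme as given.
    return urlunsplit((parts.scheme, PREFERRED_HOST, path, "", ""))


def canonical_tag(url: str) -> str:
    """Build the <link> element that belongs in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'


variants = [
    "http://www.somesite.com/",
    "http://www.somesite.com/index.html",
    "http://somesite.com/",
    "http://somesite.com/Index.html",
]
for variant in variants:
    print(canonical_tag(variant))
```

All four variants produce the same tag, so the ranking signals Google would otherwise split are pointed at one URL.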

3. Balance Link Equity and Crawlability

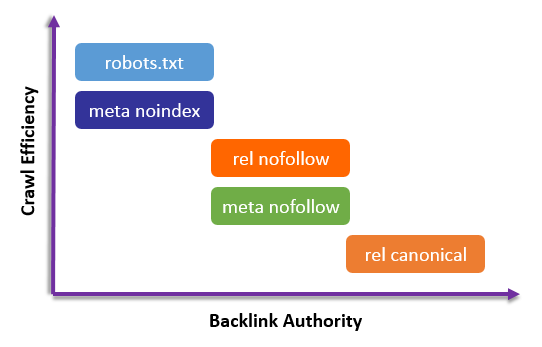

Crawl efficiency and link equity aren’t either/or, but they can wind up treading on each other’s toes if you’re not careful. Here’s how they fit together:

If you have a bunch of pages that you don’t want indexed, because they’re slowing down crawling, you can use a robots.txt disallow rule to stop them from being crawled, or a noindex robots meta tag to keep them out of the index. That saves your crawl budget for pages that you actually care about. However, if you have high-authority links on the blocked pages, Google won’t pick them up.

There is a fine line between disallowing indexing, allowing Googlebot to crawl through pages, passing link juice, and canonicalization. You need to understand the various ways in which these are implemented and the trade-offs among them, and walk each of these tightropes carefully.
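Before shipping a disallow rule, it helps to check which URLs it actually blocks. The sketch below uses Python’s standard `urllib.robotparser` against a hypothetical rule set and hypothetical `shop.example` URLs. One caveat: the standard-library parser does simple prefix matching and does not understand the wildcard patterns Google supports, so treat it as a rough check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of internal search and cart pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical URLs to audit.
urls = [
    "https://shop.example/products/red-sneaker",
    "https://shop.example/search?q=sneaker",
    "https://shop.example/cart",
]
for url in urls:
    status = "crawlable" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{status:9} {url}")
```

Any blocked URL that carries valuable inbound links is a candidate for a canonical tag or a noindex meta tag instead of a disallow rule.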

4. Paginate Categories

Even smaller e-commerce sites face pagination issues thanks to product pages. Pagination is a double-edged sword. If you’re going to have a site that anyone can find their way around, pagination is a must. At the same time, pagination can confuse Google. You can run into duplicate content issues (again) from paginated and view-all versions of the same page, and backlinks and other ranking signals can be spread across the paginated pages, diluting their effects. Additionally, in very large categories, crawl depth can be an issue too.

You can use canonical tags to identify the view-all page as the “real” one for indexing purposes. That should save you from duplication issues, but there are some shortcomings to this approach. Multi-product categories and search results won’t have view-all pages due to the large size requirement, so you can’t canonicalize in that case. (If it is an option, though, Google assures us it will consolidate backlinks too.)

You can also use rel=“next” and rel=“prev” HTML markup to mark the sequence of paginated pages. If you do this, don’t canonicalize every page to the first page. Instead, include page numbers in your URLs and give each page its own self-referencing canonical tag to prevent duplication issues. This technique is likely to be more effective when there are a large number of pages with no view-all, which describes most e-commerce sites. Google won’t follow your tags’ instructions as closely if you go this way, but if you have thousands of pages, this might be the best option.
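A minimal sketch of that markup, assuming a hypothetical URL scheme where the page number is a `?page=N` query parameter: each page gets a canonical tag pointing at itself, plus prev and next links where they exist.

```python
def pagination_tags(base_url: str, page: int, last_page: int) -> list[str]:
    """Return the <head> link tags for one page of a paginated category."""
    if not 1 <= page <= last_page:
        raise ValueError("page must be between 1 and last_page")

    def page_url(n: int) -> str:
        # Assumed URL scheme: the page number is a query parameter.
        return f"{base_url}?page={n}"

    # Self-referencing canonical: every page is its own canonical URL.
    tags = [f'<link rel="canonical" href="{page_url(page)}">']
    if page > 1:
        tags.append(f'<link rel="prev" href="{page_url(page - 1)}">')
    if page < last_page:
        tags.append(f'<link rel="next" href="{page_url(page + 1)}">')
    return tags


for tag in pagination_tags("https://shop.example/shoes", page=2, last_page=5):
    print(tag)
```

The first page has no prev link and the last page has no next link, which is also a quick thing to verify during an audit.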

When doing an audit on your e-commerce site, look for unlinked pages with pagination that is broken or leads nowhere. Also, look for standalone pages that need to be paginated but aren’t. Bear in mind that these are separate from those that are canonicalized.

5. Keep Your Sitemaps Fresh

If Google has to crawl thousands of pages to find a few dozen new product pages, it’s going to affect crawl efficiency, and your new products will take longer to show up in search. Sitemaps can help Google find new content faster and deliver it to users. Being indexed first can increase your authority, giving you a competitive advantage: you may rank ahead of competitors selling essentially the same product.

In addition to getting new pages into search results quickly, sitemaps offer you other advantages. Search Console reports indexing problems for the sitemaps you submit, giving you insight into site performance.

When you’re building your sitemap, there are a few things to keep in mind. If you do international business and your site is multilingual, you’ll need to use hreflang to tell Google which language and regional version of each page to show to which users.

Watch out for content duplication within your sitemap: it won’t cost you anything with Google, but it will make site performance models unreliable. If you have mobile and desktop versions of your site, use rel=“alternate” tags to make that clear.

One of the quickest and yet most comprehensive ways to generate a sitemap is to use the free Screaming Frog SEO Spider, which allows you to include canonicalized and paginated pages as well as PDFs. It also automatically excludes any URLs that return an error, so you don’t have to worry about redirects and broken links popping up in your sitemap.
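If you would rather generate the file yourself, the sketch below builds a basic sitemap with Python’s standard library. The input format (pairs of URL and last-modified date) and the example URLs are assumptions, and it leaves out hreflang and mobile alternates; duplicate URLs are skipped so each page appears once.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen = set()
    for loc, lastmod in entries:
        if loc in seen:  # skip duplicate URLs
            continue
        seen.add(loc)
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")


# Hypothetical product pages with their last-modified dates.
entries = [
    ("https://shop.example/products/red-sneaker", "2024-05-01"),
    ("https://shop.example/products/blue-boot", "2024-05-03"),
    ("https://shop.example/products/red-sneaker", "2024-05-01"),
]
print(build_sitemap(entries))
```

Regenerating the file whenever products are added or removed keeps the `lastmod` values meaningful.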

6. Use Schema Markup

Schema is:

“HTML tags that webmasters can use to markup their pages in ways recognized by major search providers… improve the display of search results, making it easier for people to find the right web pages.”

Schema doesn’t generate SEO benefits directly – you won’t get a boost from Google for using the tags. You also won’t be punished by Google for leaving them out. Their function is to make search results more relevant and help you serve your users better. You get indirect SEO benefits by having a better UX and a stickier site, thanks to more relevant traffic.

Schema markup means things like customer reviews and pricing can show up in the SERPs, as it allows you to extend and define the data that’s displayed in search results.

One data type supported is products, which should have every retailer sitting up a little straighter. Google’s Data Highlighter tool will talk you through how to do everything from organizing pages into page sets to flagging the content you want to be available in the SERPs. The only catch is that you need to have claimed your site in Google Search Console.
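For sites that prefer to add the markup in their own templates, here is a minimal sketch that emits product data as JSON-LD, a script-based alternative to the inline HTML attributes described above. The product values are hypothetical, and only a handful of schema.org `Product` properties are shown.

```python
import json


def product_jsonld(name: str, price: float, currency: str,
                   rating: float, review_count: int) -> str:
    """Return a JSON-LD <script> element describing one product."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # Escape "</" so a product name can never close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">' + payload + "</script>"


# Hypothetical product values.
print(product_jsonld("Red Sneaker", 49.5, "USD", 4.6, 128))
```

Whatever format you use, the values in the markup should match what shoppers see on the page.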

7. Simplify Your Taxonomy for Easy Navigation

Taxonomies affect crawl depth, load speed, and searchability. The best way to score better for all of these is to have a “shallow, broad” taxonomical structure, rather than a “narrow, deep” one.

What’s the difference? On a chart, deep narrow taxonomies have more layers – more questions to answer, or choices to make, before you reach your destination. Wide, shallow taxonomies offer multiple points of entry, more cross-links and less distance to product pages.

This matters to users as a rise in the number of clicks to reach content damages user experience. It also harms SEO directly (Google is increasingly taking UX into account now) by making your site harder to crawl, especially if it’s a big site to begin with.

A wider, more open taxonomy is easier to crawl, easier to search and easier to browse, so it’s recommended for e-commerce sites.
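One way to put a number on “deep” versus “shallow” is to count the clicks from the home page to each product. The sketch below does that with a breadth-first search over a link map; both site structures are hypothetical and exist only to show the difference.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Return the minimum number of clicks from home to every reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical narrow, deep structure: one choice per level.
deep = {
    "home": ["clothing"],
    "clothing": ["mens"],
    "mens": ["shoes"],
    "shoes": ["sneakers"],
    "sneakers": ["red-sneaker"],
}

# Hypothetical broad, shallow structure: many entry points from the home page.
shallow = {
    "home": ["sneakers", "boots", "sale"],
    "sneakers": ["red-sneaker"],
    "sale": ["red-sneaker"],
}

print("deep:", click_depths(deep, "home")["red-sneaker"], "clicks")
print("shallow:", click_depths(shallow, "home")["red-sneaker"], "clicks")
```

Running the same calculation over a real crawl shows which products sit too many clicks away from the home page.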

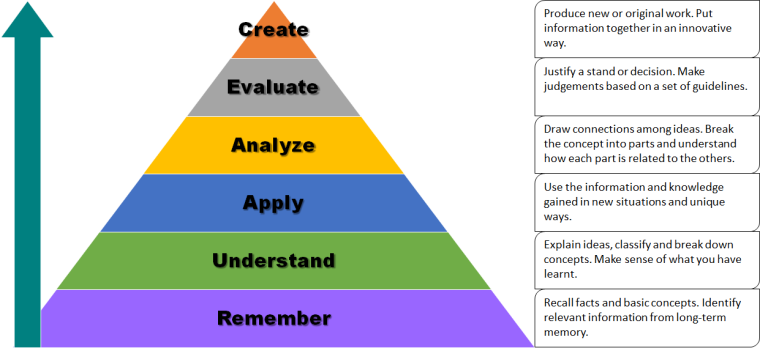

Here’s Bloom’s Taxonomy guide, to help with content creation in case you’re still evaluating how to structure your categories and product descriptions:

8. Speed it Up – Even More

Page load time isn’t a tech issue. It’s a customer service issue, and a bottom-line issue. Right now, getting good load times will give you a substantial edge over your competition. Why? According to Kissmetrics, the average e-commerce website takes 6.5 seconds to load, and a 1-second delay equates to a 7% drop in conversions. Surely e-commerce sites are getting faster to cope, then? Nope. They’re slowing down, by 23% a year. This should be seen as an opportunity to take the lead. Amazon found that it increased revenue by 1% for every 100 milliseconds of improvement.

How should you accelerate load speeds?

There are a lot of simple tweaks you can make to your website in order to speed up loading times and get on the good side of Google. Start with static caching. If your site uses dynamic languages like PHP, they can add to lag times. Turn dynamic pages static and web servers will just serve them up without processing, slashing load times.

CSS and JavaScript might need your attention too. Try tools like CSS Compressor to trim down the style sheet element of pages and make them load faster.

And don’t forget images. While everyone realizes the advantage of smaller file sizes, specifying images’ height and width is often overlooked. When you visit a URL, the browser downloads the data on the page and begins rendering it as it arrives. If image sizes haven’t been specified, the browser has no idea how large they’re going to be until after they’ve downloaded. It then has to “repaint” the layout once the real dimensions are known, which slows rendering.

Finally, if you have a big, content-rich site (you’re in e-commerce so I’m guessing you do), try serving your content from multiple servers so that browsers can download several items at a time.
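Missing image dimensions are easy to audit automatically. The sketch below uses Python’s standard `html.parser` to list every `<img>` that lacks a `width` or `height` attribute; the sample HTML is hypothetical, and sizes set purely in CSS are not detected.

```python
from html.parser import HTMLParser


class ImageSizeAuditor(HTMLParser):
    """Collect the src of every <img> missing a width or height attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if "width" not in attributes or "height" not in attributes:
            self.missing.append(attributes.get("src", "(no src)"))


# Hypothetical page fragment.
html = """
<img src="/img/a.jpg" width="400" height="300" alt="Sneaker">
<img src="/img/b.jpg" alt="Boot">
"""

auditor = ImageSizeAuditor()
auditor.feed(html)
print("Images without dimensions:", auditor.missing)
```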

9. Monitor All Pages for Errors

Checking your web pages for common errors is one of the simplest ways to maintain strong SEO. Common errors include:

- HTML validation errors: These can cause pages to look different on different devices or in different browsers, or even to fail altogether. The W3C Markup Validator will point you in the right direction.

- Broken links: These damage user experience and lead to abandonment and poor reviews, harming your reputation.

- Missing or broken images: If images aren’t working deep in a hundred thousand page site, would you know?

- JavaScript errors: Choppy loading is the least of your problems if you have serious, undiagnosed JavaScript errors. They can actually make access to some parts of your site impossible. They’re potentially a security risk as well.
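Broken links and missing images can both be caught by requesting each URL and looking at the status code. The sketch below is one simple way to do it with Python’s standard library: `http_status` sends a HEAD request, and `find_broken` flags anything that fails or returns 400 or above. The status function is passed in as a parameter so the example can run against a stub; the URLs and stubbed status codes are hypothetical. Some servers reject HEAD requests, so a real audit may need to fall back to GET.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status for a HEAD request, or 0 if the request fails."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # the server answered with an error status
    except OSError:
        return 0  # DNS failure, refused connection, timeout, and so on


def find_broken(urls: Iterable[str],
                get_status: Callable[[str], int] = http_status) -> dict[str, int]:
    """Return {url: status} for every URL that fails or returns 400 or above."""
    broken = {}
    for url in urls:
        status = get_status(url)
        if status == 0 or status >= 400:
            broken[url] = status
    return broken


# Stubbed statuses so the example runs without network access.
fake_statuses = {
    "https://shop.example/products/red-sneaker": 200,
    "https://shop.example/products/old-boot": 404,
    "https://shop.example/img/missing.jpg": 0,
}
print(find_broken(fake_statuses, get_status=fake_statuses.get))
```

Dropping the `get_status` argument makes `find_broken` issue real requests, so run it against your own site and at a polite rate.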

Over to You

Fixing errors like duplicate content and broken links can deliver substantial benefits. Address more technical matters like canonicalization and schema, and your e-commerce site should see improved search rankings, more real estate in the SERPs, and the jumps in traffic and revenue that go with it!

Source:https://www.searchenginejournal.com/9-things-validate-auditing-e-commerce-sites-seo/158361/
